Confirmation bias is a feature, not a bug

There’s a glass of sparkling water in front of you, but you think it’s a Sprite. You lift it to your mouth, expecting to taste something sugary and lemony. When you take your first sip, you’re caught completely off guard and almost spit it out. Your expectations did not match the inputs your brain received from your taste buds. You wanted a refreshingly cool drink, but all you got was surprise and disgust.

What we have here is a simple example of what cognitive scientists call Bayesian predictive processing. It’s a promising and powerful new way of understanding how the brain works. But it also suggests some inconvenient truths about the way we process information and news.

The Bayesian brain

What does it mean to say that the brain is a Bayesian predictive processor? Thomas Bayes was an 18th-century statistician who came up with one of the most widely used theorems in the study of probability. Here’s a simple explanation of his idea, adapted from Investing: The Last Liberal Art:

Imagine that you and a friend have decided to make a wager: that with one roll of a die, you’ll get a 6. The odds are one in six, a 16% chance. But then suppose your friend rolls the die, quickly covers it with her hand, and takes a peek. “I can tell you this much,” she says; “it’s an even number.” Now you have new information and your odds change dramatically to one in three, a 33% chance. While you’re considering whether to change your bet, your friend adds: “And it’s not a 4.” With this additional bit of information, your odds have changed again, to one in two, a 50% probability. With this very simple example, you’ve performed a Bayesian analysis. Each new piece of information affected the original probability, and that’s a Bayesian inference.

The Bayesian approach to probability is relatively simple: take the odds of something happening and adjust for new information. Initial beliefs + recent objective data = a new and improved belief. This is in contrast to blind faith (i.e. beliefs that don’t change in the face of new evidence).
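To make the dice wager concrete, here is a minimal Python sketch of the same reasoning. The `update` helper and the `belief` dictionary are names I made up for illustration. The idea is simply to start with equal odds for every face, throw out the faces each hint rules out, and rescale what is left.

```python
from fractions import Fraction


def update(prior, is_consistent):
    """Condition a belief over die faces on one new piece of information.

    prior: dict mapping each face to its current probability.
    is_consistent: function that returns True when a face agrees with the hint.
    """
    # Faces ruled out by the hint drop to zero probability.
    unnormalized = {
        face: (p if is_consistent(face) else Fraction(0))
        for face, p in prior.items()
    }
    # Rescale the surviving faces so the probabilities sum to one again.
    total = sum(unnormalized.values())
    return {face: p / total for face, p in unnormalized.items()}


# Initial belief: a fair die, so every face has a one-in-six chance.
belief = {face: Fraction(1, 6) for face in range(1, 7)}
print(belief[6])  # 1/6

# Hint 1: "it's an even number."
belief = update(belief, lambda face: face % 2 == 0)
print(belief[6])  # 1/3

# Hint 2: "and it's not a 4."
belief = update(belief, lambda face: face != 4)
print(belief[6])  # 1/2
```

Each call to `update` takes the previous belief as its starting point, which is the "initial beliefs + new data" recipe in miniature.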

So how does this relate to the brain? Recent work in cognitive science suggests that the brain makes probabilistic guesses about what it’s about to experience and uses feedback about its errors to adjust predictions for the next time around. We expect solid objects to have backs that will come into view as we walk around them, doors to open, cups to hold liquid, and so forth. The brain works towards an equilibrium in which its environment is rendered predictable. These processes of prediction and error correction often happen below the level of consciousness. They’re encoded in hierarchical networks of neurons, and they influence how we perceive the world.
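Here is a deliberately tiny sketch of that predict-then-correct loop, using the sparkling water from the introduction. It is a toy, not the hierarchical model the research describes: the single "sweetness" number, the `update_prediction` function, and the `learning_rate` of 0.3 are all assumptions I picked for illustration.

```python
def update_prediction(prediction, observation, learning_rate=0.3):
    """Move the prediction part of the way toward what was actually observed."""
    error = observation - prediction  # the "surprise"
    return prediction + learning_rate * error


# Hypothetical sweetness scale: 0.0 is plain water, 1.0 is very sweet.
prediction = 0.9  # you expect a Sprite
errors = []

for sip in range(10):
    observation = 0.0  # every sip is actually sparkling water
    errors.append(abs(observation - prediction))
    prediction = update_prediction(prediction, observation)

# The surprise shrinks with every sip as the prediction catches up.
print([round(e, 3) for e in errors])
```

The first sip produces the biggest error. By the tenth, the prediction has nearly caught up with reality and the drink is no longer surprising.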

Surprise, surprise

What happens when the brain is surprised (i.e. when sensory inputs don’t match the brain’s predictions)? In general, the brain employs one of two strategies:

- It incorporates the unexpected information and updates its predictions to account for the error.

- It discards the unexpected information and constructs the external environment that it expects to encounter. In other words, you end up perceiving what you expect to find, rather than what’s actually there. This construction reinforces prior predictions, which creates a self-reinforcing loop.

Let’s zoom in on strategy #2. Our brains have a predictive model of language based on everything we’ve ever read, written, spoken, and listened to. This probabilistic model affects our perceptual experience in ways that are beyond our control. You can experience this phenomenon for yourself by reading these words:

Your brain doesn’t care that the shape in the middle of the first word and the shape in the middle of the second word are identical. It expects to find the letter “H” between a “T” and an “E”, and the letter “A” between a “C” and a “T.” No matter how hard you try, you will always read this phrase as THE CAT. This is the power of Bayesian predictive processing. (We can predict that non-English readers would not experience this effect. They don’t have the same probabilistic models about which letters are likely to appear where.) It takes a lot of effort to make yourself see the letter in the middle of the second word as an “H”. And when you stop concentrating, you’ll go back to experiencing it as an “A”. There’s no real sense in which you can force yourself to see it as an “H” for an extended period of time.
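One way to picture what is going on is a small Bayesian calculation. The sketch below is an illustration with made-up numbers, not a model of actual reading: the `posterior` function, the 50/50 likelihood for the ambiguous shape, and the 99-to-1 context priors are all assumptions.

```python
def posterior(context_prior, likelihood):
    """Combine what the context predicts with what the eye reports (Bayes' rule)."""
    unnormalized = {
        letter: context_prior[letter] * likelihood[letter]
        for letter in likelihood
    }
    total = sum(unnormalized.values())
    return {letter: value / total for letter, value in unnormalized.items()}


# Assumption: the ambiguous shape looks exactly as much like an H as an A.
glyph_likelihood = {"H": 0.5, "A": 0.5}

# Assumption: rough expectations of an English reader for the middle letter.
prior_between_t_and_e = {"H": 0.99, "A": 0.01}  # "THE" vs. "TAE"
prior_between_c_and_t = {"H": 0.01, "A": 0.99}  # "CHT" vs. "CAT"

# Assumption: a reader with no model of English has no preference either way.
flat_prior = {"H": 0.5, "A": 0.5}

first_word = posterior(prior_between_t_and_e, glyph_likelihood)
second_word = posterior(prior_between_c_and_t, glyph_likelihood)
no_context = posterior(flat_prior, glyph_likelihood)

print(max(first_word, key=first_word.get))    # H
print(max(second_word, key=second_word.get))  # A
print(no_context)                             # an even split between H and A
```

The shape on the page contributes nothing to the decision, since it is equally consistent with both letters. The context does all the work, which is why the same shape reads as two different letters.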

From experiments like this, we’ve learned that the the brain makes sense of the world by minimizing uncertainty and surprise. It constructs the external environment that it expects to encounter. This construction then gets bubbled up to the the level of consciousness. It’s what we experience as THE CAT rather than TAE CAT or THE CHT. Did you notice I’ve been repeating the word “the” multiple times in this paragraph?

Confirmation bias & optical illusions

THE CAT can help us make sense of confirmation bias (the tendency to search for, interpret, and recall information in a way that confirms pre-existing beliefs). When we talk about confirmation bias, we usually refer to high-level, abstract concepts like truth and news and misinformation. But THE CAT illusion (and the Bayesian model of the brain) reveals that confirmation bias is actually a side effect of the brain’s subconscious prediction mechanisms. The mechanisms that control our most basic perceptual processes (vision, smell, touch) are the same ones that lead to confirmation bias. They’re a feature, not a bug. If the brain were not designed from the ground up to exhibit confirmation bias, we would always be surprised by the most commonplace experiences: when a stove is hot to the touch, when gravity causes objects to fall to the floor, when we run into a wall instead of walking through it.

When it comes to vision, smell, touch, hearing, and taste, the Bayesian model of prediction and error correction serves us quite well. We can pretty easily avoid getting burnt on the stove or slamming into a wall. But when it comes to forming and updating our beliefs, predictive processing can lead us astray.

Our belief systems are just as real and forceful as heat, gravity, and solid walls. We predict that they will continue to be true, just as we predict that stoves will continue to be hot. An optical illusion is to your visual system as opposing evidence is to your belief system. In Bayesian terms, optical illusions and opposing evidence generate prediction errors (surprise) that your brain must account for by either (1) incorporating the new evidence and updating its predictions, or (2) ignoring the new evidence and constructing the external environment that it expects to encounter.
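In Bayesian terms, how far a prediction error moves a belief depends heavily on how strong the belief was to begin with. The sketch below is an illustration under made-up numbers: the `bayes_update` function and the two likelihoods (the opposing evidence is four times more likely if the belief is false) are assumptions, not measurements of anything.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return the updated probability of a yes/no belief after seeing evidence."""
    numerator = p_evidence_if_true * prior
    denominator = numerator + p_evidence_if_false * (1 - prior)
    return numerator / denominator


# Assumption: the evidence is four times more likely if the belief is false.
P_IF_TRUE, P_IF_FALSE = 0.2, 0.8

# The same piece of opposing evidence, applied to weaker and stronger priors.
for prior in (0.5, 0.9, 0.999, 1.0):
    updated = bayes_update(prior, P_IF_TRUE, P_IF_FALSE)
    print(f"prior {prior} -> updated {updated:.3f}")
```

With these numbers, an undecided mind (prior of 0.5) drops to 0.2, while a near-certain one barely moves. A prior of exactly 1.0 does not move at all, because the "belief is false" term is multiplied by zero. That is strategy (2) expressed in arithmetic.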

THE CAT is like a belief. It’s what your brain expects to be true. It’s what you see instinctually. It’s what you usually don’t question because it appears obvious and self-evident. THE CHT and TAE CAT are the realities. It takes a lot of effort to notice that the realities differ from your beliefs. And even when you do, it’s easy to slip back into old patterns of seeing. If belief systems are anything like the visual system, we often perceive what we expect to see (without even realizing it). If you’re 100% convinced that Donald Trump is a terrible person and you see an article suggesting that he did something good for the world, you’re more likely to discount the source than to update your belief about Trump’s character.

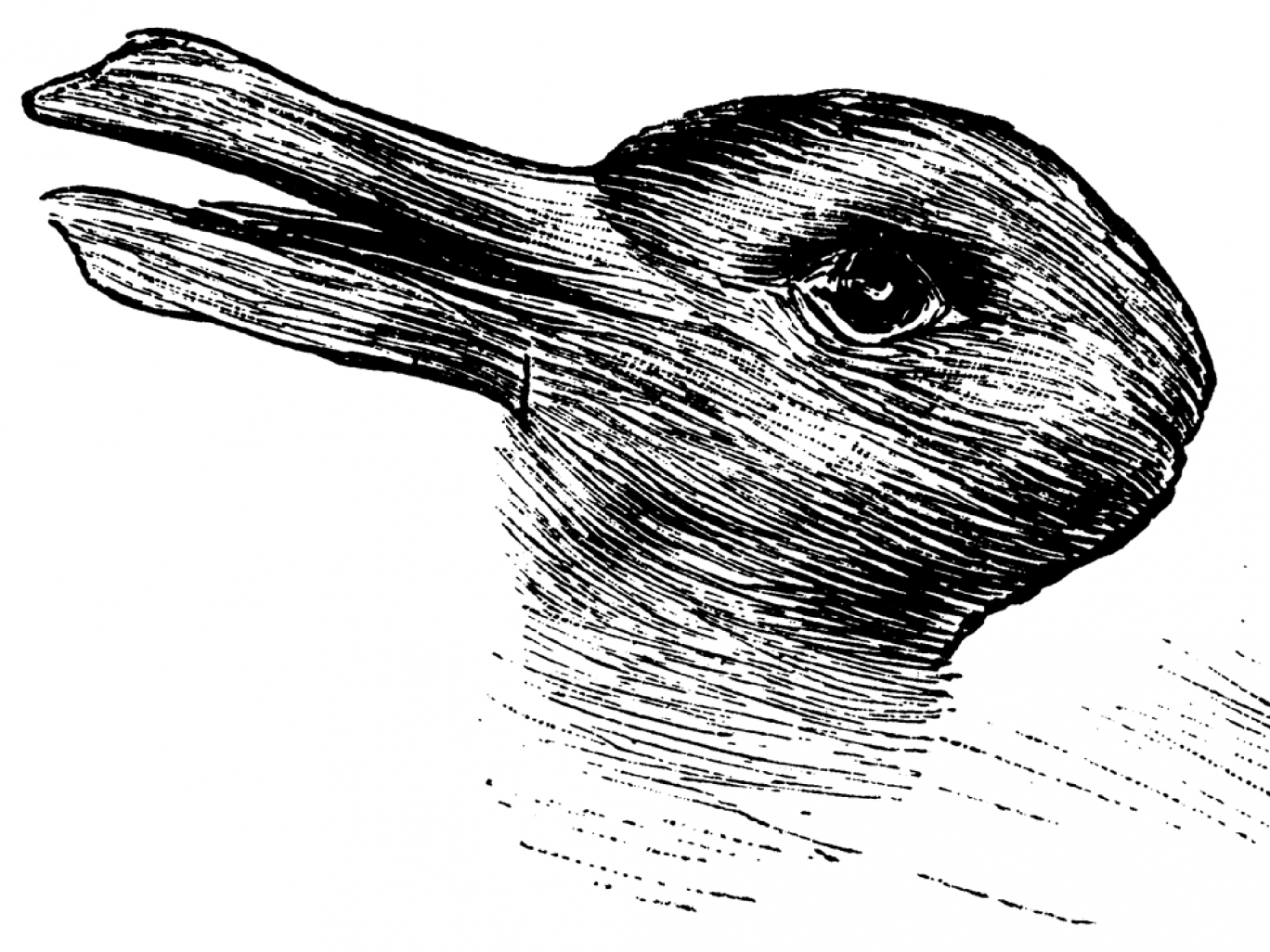

Not all optical illusions are created equal. There are some illusions in which awareness alters perception. For example, when I tell you that this image is both a duck and a rabbit, you’re able to see both if you look hard enough:

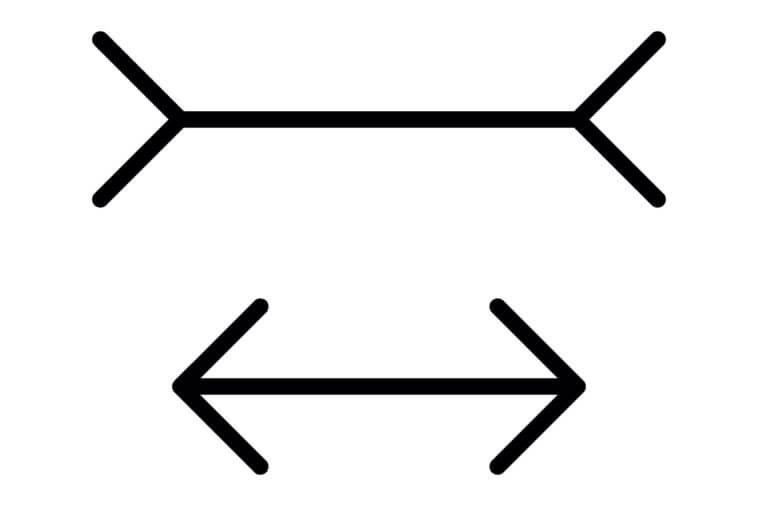

However, there are some optical illusions in which awareness is relatively useless. Sometimes the brain simply tricks you and projects the world it expects to encounter onto your consciousness. For example, no amount of awareness will convince your brain that these two lines are the same length (even though they are, in fact, identical).

I imagine that belief systems and optical illusions exist on a spectrum—some are more easily transformed by awareness than others. I hope to god that most of our belief systems are more like the duck/rabbit than the identical lines, but I fear that they’re not.

Measuring the lines

Our rhetoric around confirmation bias is broken because we treat it as a problem to be solved rather than a necessary tool for survival. We talk so much about bias training and “eliminating” bias, and we dream about how great the world would be if only everyone could get rid of their biases.

What are we to do when the enemy we’ve identified is actually the thing that makes us tick? It’s dangerous and almost nonsensical to pretend that mere awareness of confirmation bias will make us better consumers of information. It’s like saying that if you complete enough trainings on eliminating optical illusions, you’ll one day be able to really see the lines as identical. Perhaps the best we can do is develop a habit of measuring the lines (and surround ourselves with line-measurers)—especially when it seems obvious that they’re not the same length. Then again, I have a bias toward believing that confirmation bias is a problem that can indeed be solved.

Further reading

Book Review: Surfing Uncertainty—a deep dive on predictive processing models.

What is mood? A computational perspective—a new article that uses Bayesian predictive processing models to explain mood disorders like depression, mania, and anxiety.

Bayes’ rule: Guide—an introduction to Bayes’ theorem.

From Bacteria to Bach and Back: The Evolution of Minds—a wonderfully mind-blowing book from which I stole THE CAT illusion.